Google's Gemini 2.5 family marks a significant step forward in AI system development. Its two key variants are Gemini 2.5 Flash-Lite and Gemini 2.5 Pro.

These models differ not only in performance but, more importantly, in how they can be used in development. This matters especially if you prefer so-called vibe coding, an approach where development doesn't follow a strict structure but is driven by iteration, context, and intuitive work with the model.

Unlike traditional linear code writing, vibe coding emphasizes continuous testing, adaptation, leveraging model context, and parallel work with variables and outputs. Gemini 2.5 offers developers two paths: either the extremely fast Flash-Lite or the deeply contextual and computationally intensive Pro model.

Technical Parameters and Development Options

| Parameter | Gemini 2.5 Flash-Lite | Gemini 2.5 Pro |
| --- | --- | --- |
| Context limit | 1 million tokens | 1-2 million tokens |
| Thinking mode | Can be enabled, default off | Active, managed compute budget |
| Supported modalities | Text, images, video, audio, URL | Extended multimodality including PDF |
| Latency | Very low | Medium |
| Price (input/output) | ~$0.10 / ~$0.10 | $1.25 / $10.00 |

Flash-Lite is particularly suitable for applications where you need to iterate quickly, debug agent logic, or test system responses in real-time. In contrast, the Pro model is designed for complex scenarios requiring deep understanding, long-term memory, and sophisticated data flow and decision management.
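The price row in the table above can be made concrete with a rough cost estimate. The sketch below is illustrative only: it assumes the listed prices are USD per 1 million tokens (the table does not state the unit), and the names `Pricing`, `PRICES`, and `estimate_cost` are hypothetical helpers, not part of any Gemini SDK.

```python
from dataclasses import dataclass

# Assumption: the table's prices are quoted per 1 million tokens.
TOKENS_PER_PRICE_UNIT = 1_000_000


@dataclass(frozen=True)
class Pricing:
    input_usd: float   # price for input tokens, taken from the table
    output_usd: float  # price for output tokens, taken from the table


# Values copied from the "Price (input/output)" row; Flash-Lite is approximate.
PRICES = {
    "flash-lite": Pricing(input_usd=0.10, output_usd=0.10),
    "pro": Pricing(input_usd=1.25, output_usd=10.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a single request."""
    price = PRICES[model]
    total = input_tokens * price.input_usd + output_tokens * price.output_usd
    return total / TOKENS_PER_PRICE_UNIT


if __name__ == "__main__":
    # Hypothetical workload: a 200k-token prompt with a 20k-token answer.
    for name in PRICES:
        cost = estimate_cost(name, input_tokens=200_000, output_tokens=20_000)
        print(f"{name}: ${cost:.4f}")
```

Under these assumptions, the gap between the two models grows mainly with output volume, which is worth keeping in mind for iteration-heavy workflows that generate a lot of text.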

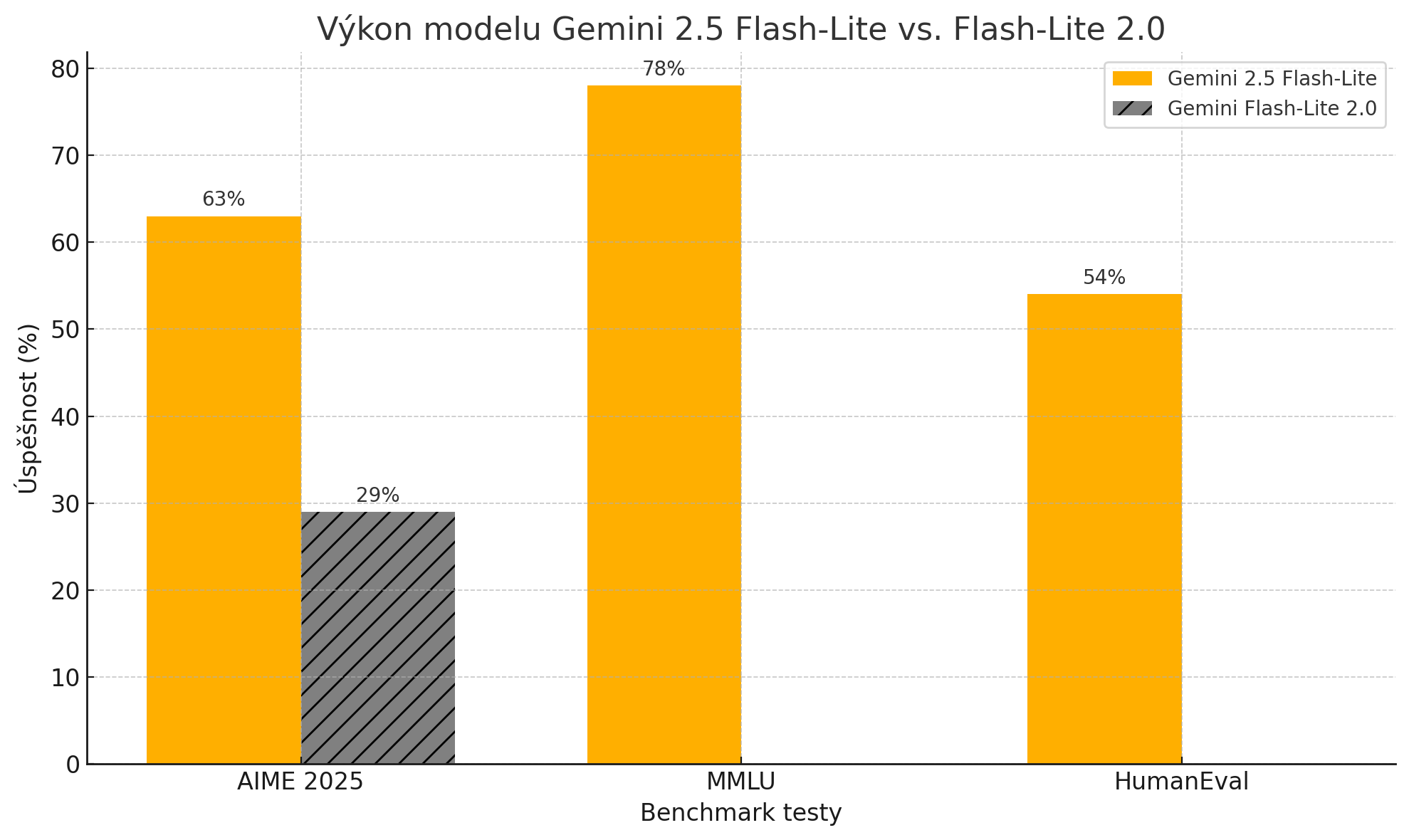

Benchmark Results

The following table presents performance metrics of the new Gemini models compared to their predecessors across key standardized AI tests:

| Test | Gemini 2.5 Flash-Lite | Flash-Lite 2.0 | Gemini 2.5 Pro |
| --- | --- | --- | --- |
| AIME 2025 | 63% | 29% | 88% |
| MMLU | 78% | - | 84% |
| HumanEval | 54% | - | 90% |
| GPQA / MMMU | - | - | 86% / 83% |

What Do Individual Benchmarks Measure?

AIME (American Invitational Mathematics Examination) tests a model's ability to solve advanced high school-level mathematical problems. The increase from 29% for Flash-Lite 2.0 to 63% for Gemini 2.5 Flash-Lite indicates a significant improvement in mathematical reasoning and logical thinking.

MMLU (Massive Multitask Language Understanding) evaluates model knowledge across 57 different areas including science, history, law, and medicine. The 78% result for Flash-Lite and 84% for the Pro version confirms the broad knowledge base of both models.

HumanEval measures the ability to generate functional programming code. While Flash-Lite achieves a solid 54%, the Pro model's 90% success rate demonstrates excellent capabilities in coding and software development.

GPQA (Graduate-Level Google-Proof Q&A) and MMMU (Massive Multi-discipline Multimodal Understanding) test expert-level problem solving: GPQA with graduate-level science questions, MMMU with multimodal tasks at university level. The Pro model excels here with results of 86% and 83%, respectively.

Results Interpretation

These results show that Gemini 2.5 Flash-Lite is significantly more powerful than its predecessor, Flash-Lite 2.0, especially in mathematical reasoning, where the AIME 2025 score more than doubled (from 29% to 63%). This makes the model well-suited for everyday application development, automating routine tasks, and rapid prototyping of solutions where speed and efficiency are emphasized while maintaining sufficient accuracy.
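The "more than doubled" claim can be checked directly from the AIME 2025 row of the benchmark table. The snippet below is a minimal sketch; the `gain` helper is a hypothetical name introduced only for this illustration.

```python
# AIME 2025 scores in percent, as listed in the benchmark table.
AIME_2025 = {
    "Flash-Lite 2.0": 29,
    "Gemini 2.5 Flash-Lite": 63,
    "Gemini 2.5 Pro": 88,
}


def gain(old: float, new: float) -> tuple[float, float]:
    """Return (gain in percentage points, ratio of new to old)."""
    return new - old, new / old


points, ratio = gain(AIME_2025["Flash-Lite 2.0"], AIME_2025["Gemini 2.5 Flash-Lite"])
print(f"+{points} percentage points, {ratio:.2f}x")  # prints "+34 percentage points, 2.17x"
```

Reporting both the percentage-point gain and the ratio avoids a common ambiguity: a 34-point gain is a roughly 2.17x improvement here only because the starting score was low.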

The Pro model excels in tasks requiring sophisticated thinking, long-term planning, and interpretation of diverse input data. Its high score in coding tests (90% in HumanEval) makes it a strong partner for complex development projects. Its results on GPQA and MMMU point to an ability to handle expert-level questions, work with multimodal data, and solve problems requiring deep contextual understanding.

The choice between Gemini 2.5 Flash-Lite and the Pro version depends on specific project requirements. Flash-Lite offers an excellent performance-to-speed ratio for common tasks, while the Pro version is designed for the most demanding applications requiring maximum accuracy and sophisticated analytical capabilities.
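One way to express this trade-off in code is a small routing function that picks a model per task. This is a sketch under stated assumptions, not a recommended policy: the `Task` fields and the `choose_model` rule are hypothetical, the model identifier strings are assumed and should be checked against the current API documentation, and the 1-million-token threshold comes from the parameter table above.

```python
from dataclasses import dataclass

# Assumed model identifiers; verify against the current Gemini API docs.
FLASH_LITE = "gemini-2.5-flash-lite"
PRO = "gemini-2.5-pro"

# Context limit for Flash-Lite as listed in the parameter table.
FLASH_LITE_CONTEXT_TOKENS = 1_000_000


@dataclass
class Task:
    needs_deep_reasoning: bool  # e.g. multi-step planning, complex refactoring
    prompt_tokens: int          # estimated size of the prompt plus context


def choose_model(task: Task) -> str:
    """Pick a model: default to the fast one, escalate only when needed."""
    if task.prompt_tokens > FLASH_LITE_CONTEXT_TOKENS:
        return PRO  # the prompt would not fit the Flash-Lite context limit
    if task.needs_deep_reasoning:
        return PRO  # accuracy matters more than latency and price
    return FLASH_LITE


if __name__ == "__main__":
    print(choose_model(Task(needs_deep_reasoning=False, prompt_tokens=5_000)))
    print(choose_model(Task(needs_deep_reasoning=True, prompt_tokens=5_000)))
```

Defaulting to Flash-Lite and escalating only on explicit signals keeps the fast, cheap path as the common case, which fits the iterative style described earlier.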

When to Use Which Model from a Developer's Perspective

Gemini 2.5 Flash-Lite is suitable when:

- you need a fast-responding environment for prototyping,

- you work in tools like VS Code or Replit, or rely on context variables,

- you prefer frequent execution and testing without significant latency,

- you focus on chatbots, summarization, translations, or simpler tools with reactive behavior.